- What are Python Libraries?

- 10 Best Python Libraries for Machine Learning Development: A Complete Overview

- How to Choose the Right Python Machine Learning Library

- Build Smarter Machine Learning Solutions with Space O AI

- Frequently Asked Questions on Python Libraries for Machine Learning

- Which Python library should I learn first?

- Can I use multiple Python machine learning libraries in one project?

- Which library handles the largest datasets best?

- Are all Python machine learning libraries free?

- Should I use a local library or a cloud-based AI service?

- What’s the difference between Scikit-learn, XGBoost, and LightGBM?

Python Libraries for Machine Learning: A Practical Guide for Developers 2026

Python has become the most popular programming language for machine learning because it offers a simple syntax, a massive community, and a rich ecosystem of powerful libraries. Whether you are building your first prediction model or working on a production-level ML system, Python gives you access to tools that simplify data processing, model development, training, evaluation, and deployment.

The challenge is that there are hundreds of libraries available today, each built for a specific purpose. Some focus on numerical computing, some on classical machine learning, some on deep learning, and others on visualization or NLP. This makes it difficult for beginners and even experienced developers to decide which tools are right for their project.

With our 15+ years of experience in offering machine learning development services, we understand the ned for selecting the right libraries nd technologies to build powerful ML solutions. Through this experience, we’ve learned what truly matters when choosing the right tool.

This blog gives you a clear and comprehensive overview of the best Python libraries for machine learning. You will learn what each library does, the problems it solves, and when you should use it. By the end, you will have a strong understanding of the core tools that power modern machine learning workflows.

What are Python Libraries?

Python libraries are collections of pre-written code that help you perform specific tasks without having to build everything from scratch. In the context of machine learning, these libraries provide ready-to-use functions for data processing, mathematical operations, model building, visualization, and more.

Instead of writing complex algorithms on your own, you can rely on these libraries to speed up development and reduce errors. Python libraries make machine learning development more accessible, efficient, and scalable. They give developers a strong foundation so they can focus on solving real-world problems rather than reinventing the core mathematical and computational tools required to build ML models.

Key characteristics of good libraries

The best Python machine learning libraries share several important characteristics:

- Documentation and Community: First, they have extensive documentation and active community support. When you’re stuck, you need help. Libraries like scikit-learn have communities of thousands where you can find answers to almost any question.

- Active Maintenance: Second, they’re actively maintained. The developers keep updating them with bug fixes, performance improvements, and new features as the field evolves.

- Performance Optimization: Third, they’re optimized for speed. Many libraries use compiled backends (like C or CUDA) under the hood, so even though you’re writing Python, your code runs incredibly fast.

- Seamless Integration: Finally, they work together. The best practice in Python for machine learning is actually using multiple libraries in sequence. Your data preparation uses one library, your model building uses another, and your visualization uses yet another. The fact that these tools are designed to integrate seamlessly with each other is part of what makes Python and machine learning combinations so powerful.

Most machine learning with Python projects don’t use just one library. They use three, four, or even more libraries working together in a carefully orchestrated pipeline. Understanding how these libraries complement each other is essential to building effective machine learning systems.

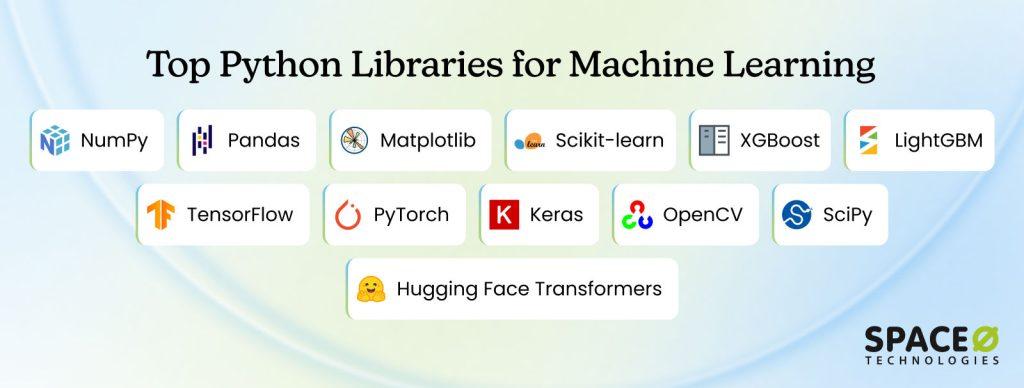

10 Best Python Libraries for Machine Learning Development: A Complete Overview

When we talk about the best Python libraries for machine learning, we’re really talking about libraries that handle different parts of your machine learning workflow. Some are foundation layers that everything else builds on. Others are specialized for specific tasks. Let’s take a detailed look at each of them:

1. Data preparation and foundation libraries

These are the tools you’ll use first in almost every machine learning project. You need to load your data, explore it, clean it, and prepare it before you can even think about building models.

1.1 NumPy

Best For: Foundation for all numerical computing; used by almost every other library.

NumPy is the foundation of the entire Python machine learning ecosystem. You won’t build a machine learning model directly with NumPy. But almost every other library you use builds on top of NumPy.

The real power of NumPy is its speed. Operations that would take minutes in pure Python take milliseconds in NumPy because it uses compiled C code underneath.

What It Does: Handles multi-dimensional arrays and mathematical operations on those arrays.

Key Strength: Lightning-fast performance on large datasets through compiled C backend.

Common Use: Storing and manipulating datasets with thousands of examples and hundreds of features.

When to Use: Always. NumPy is used internally by Pandas, Scikit-learn, TensorFlow, and PyTorch. You rarely import it directly for end-user tasks, but it powers everything behind the scenes.

1.2 Pandas

Best For: Loading, exploring, and preparing data before modeling.Key Strength: Handles missing values, removes duplicates, merges datasets, and groups data with intuitive syntax.

If NumPy is the foundation, Pandas is the first tool you’ll actually interact with. Pandas gives you DataFrames, which are like spreadsheets in Python. You load your CSV files into Pandas, and suddenly you can explore your data like you would in Excel, but with far more power.

Most data scientists spend 70% of their time in Pandas, cleaning and preparing data. The reason is simple: garbage in, garbage out. Your model is only as good as your data.

What It Does: Provides DataFrames for data manipulation, cleaning, and preprocessing.

Common Use: Loading CSV files, removing duplicates, feature engineering, and handling time series data.

When to Use: Start every machine learning project with Python here. Use Pandas before you touch any machine learning algorithm. You’ll spend most of your time in Pandas getting data ready.

1.3 Matplotlib and Seaborn

Best For: Data exploration and visual analysis.

You can’t understand your data if you can’t see it. Matplotlib is the foundational visualization library for Python. It’s powerful but sometimes verbose to use. Seaborn builds on top of Matplotlib and makes beautiful visualizations much easier. These aren’t strictly machine learning tools, but they’re essential for any serious work in machine learning with Python.

What It Does: Creates visualizations, plots, charts, and graphs.

Key Strength: Publication-quality visualizations with seamless Pandas integration.

Common Use: Exploratory data analysis, visualizing model results, and creating reports.

When to Use: During data exploration to spot patterns and outliers, and when communicating results to stakeholders who need to understand your findings visually.

2. Classical machine learning libraries

Classical machine learning includes various ML approaches like decision trees, logistic regression, k-means clustering, and support vector machines. These algorithms have been around for decades, but they’re still incredibly powerful for many real-world problems.

2.1 Scikit-learn

Best For: Tabular data and traditional ML models on structured data.

Scikit-learn is where most people begin their Python machine learning libraries journey. It’s the gold standard for classical machine learning algorithms. What makes scikit-learn special is consistency. Once you learn the syntax for one algorithm, you understand the syntax for all of them.

If you’re working with structured data (spreadsheets, CSVs, databases), scikit-learn should be your first choice. Most importantly, scikit-learn models are interpretable. You can actually understand why your model made a prediction, which is critical for many business applications.

What It Does: Implements classical machine learning algorithms (classification, regression, clustering).

Key Strength: Consistent API across all algorithms, excellent documentation, and highly interpretable models.

Common Use: Decision trees, random forests, SVM, k-means clustering, logistic regression.

When to Use: When working with structured, tabular data (CSV files, databases). Use this as your first choice before trying anything more complex. It’s the fastest way to get a working model and understand whether your problem is solvable with machine learning.

2.2 XGBoost

Best For: Competition-winning models on tabular data.

XGBoost is gradient boosting, which is a technique that combines many weak models into one strong model. If you look at winning solutions in machine learning competitions like Kaggle, XGBoost appears frequently in them.

This isn’t by accident. XGBoost is incredibly effective on tabular data. The trade-off is that XGBoost takes longer to train than simpler models, and it’s less interpretable. But when accuracy is critical, the results speak for themselves.

What It Does: Implements gradient boosting for high-accuracy predictions.

Key Strength: Extreme accuracy, built-in handling of missing values, and fast training.

Common Use: Kaggle competitions, fraud detection, and credit scoring.

When to Use: When Scikit-learn isn’t giving you the accuracy you need on tabular data. XGBoost’s advanced algorithm typically delivers better accuracy than simpler models on structured datasets.

2.3 LightGBM

Best For: Large datasets where speed and memory efficiency matter.

LightGBM is another gradient boosting library, but it’s optimized for speed. When XGBoost takes a long time to train, LightGBM completes faster. For large datasets with millions of rows, this speed advantage is transformative. LightGBM uses slightly less memory than XGBoost, which matters when your dataset is huge.

What It Does: Implements fast gradient boosting optimized for speed and efficiency.

Key Strength: Faster than XGBoost with a lower memory footprint.

Common Use: Real-time scoring systems, large-scale predictions with millions of rows.

When to Use: When you have large datasets and need training time to be reasonable. Also use LightGBM if you’re deploying a model that needs to make predictions in real-time with minimal latency.

3. Deep learning frameworks

Deep learning means neural networks. These are powerful but require more data, more compute, and more patience to get working. But for certain problems like image recognition, natural language processing, and time series forecasting, deep learning is the right tool.

3.1 TensorFlow

Best For: Production-grade deep learning and large-scale deployment.

TensorFlow is the most production-ready deep learning framework. Built by Google, it’s battle-tested at massive scale. If you want to build a deep learning system that serves millions of users, TensorFlow is the natural choice.

TensorFlow’s strength is its ecosystem. It has tools for everything: training, optimization, deployment, mobile inference, and edge computing. The downside is that TensorFlow has a steeper learning curve than some alternatives. The code can feel verbose.

What It Does: Builds and trains deep neural networks at scale.

Key Strength: Scalability, GPU/TPU support, and deployment on any platform (mobile, cloud, edge).

Common Use: Image classification, object detection, NLP models, production inference.

When to Use: When you need to deploy models to production at scale, across multiple devices, or require the model to run on mobile phones. TensorFlow’s ecosystem is mature and battle-tested in enterprise environments.

3.2 PyTorch

Best For: Research, rapid prototyping, and custom architectures.

PyTorch is the deep learning framework beloved by researchers. It has a more intuitive, Pythonic API than TensorFlow. When you’re trying new ideas and need to iterate quickly, PyTorch feels more natural.

Most cutting-edge machine learning research uses PyTorch. The community is incredibly active. The trade-off is deployment. PyTorch’s production infrastructure is less mature than TensorFlow’s, though it’s catching up quickly.

What It Does: Implements deep learning with dynamic computation graphs.

Key Strength: Intuitive Pythonic API, easy debugging, and dynamic computation graphs.

Common Use: Transformer models, GANs, custom loss functions, cutting-edge research.

When to Use: When you’re experimenting with new model architectures or doing research. PyTorch’s dynamic nature makes it easier to debug and iterate quickly. It’s widely used in academic machine learning research.

3.3 Keras

Best For: Rapid prototyping and learning deep learning.

Keras sits on top of TensorFlow and simplifies building neural networks dramatically. If you’re new to deep learning, Keras is the gentlest introduction. You can build a neural network with just a few lines of code.

The simplicity comes with less flexibility. For standard architectures, Keras is perfect. For custom, novel architectures, you might hit their limitations.

What It Does: Provides a high-level API for building neural networks.

Key Strength: Simplest API for neural networks with beginner-friendly syntax.

Common Use: Getting started with deep learning, simple sequential architectures.

When to Use: When you’re learning deep learning for the first time or need to quickly prototype a standard neural network. Keras abstracts away complexity but sacrifices flexibility for custom architectures.

4. Specialized libraries

Some problems need specialized tools. Natural language processing, computer vision, reinforcement learning, and hyperparameter optimization. These are solved more effectively by libraries built specifically for those domains.

4.1 OpenCV

Best For: Image and video processing tasks.

OpenCV is a computer vision library. It handles image loading, preprocessing, feature detection, and tons of other operations you need for any vision task. If you’re building anything with images or video, you’ll probably use OpenCV for preprocessing before feeding data into a deep learning model.

What It Does: Handles computer vision operations and image processing.

Key Strength: Extensive image processing functions with GPU acceleration.

Common Use: Image preprocessing, feature detection, video analysis.

When to Use: When you need to prepare images or videos for deep learning models. Use OpenCV for resizing, rotating, filtering, and extracting features before feeding data into your neural network.

4.2 Hugging Face Transformers

Best For: Natural language processing and text tasks.

Transformer models have revolutionized natural language processing. Hugging Face maintains an enormous library of pre-trained transformer models. For almost any NLP task, you can find a pre-trained model that already understands language in sophisticated ways.

You just fine-tune it for your specific problem. This library saves significant development time. The Python libraries landscape would look completely different without this library.

What It Does: Provides pre-trained transformer models and transfer learning.

Key Strength: Extensive library of pre-trained models with a simple API for fine-tuning.

Common Use: These models are also highly effective for named entity recognition tasks, where identifying people, organizations, and locations in text is crucial. For domain-specific NER applications, explore various NER models that can be fine-tuned for your use case

When to Use: For any NLP task where you need strong performance. Pre-trained models already understand language deeply, so you only need to fine-tune them for your specific task. Training from scratch would require significant computing and data resources.

Deciding how much fine-tuning your language models need is part of a broader machine learning model selection strategy that balances performance against development timelines and resource constraints

4.3 SciPy

Best For: Statistical analysis and mathematical operations.

SciPy provides scientific computing functions. Statistical tests, optimization algorithms, and linear algebra operations that are more specialized than what NumPy offers. For many machine learning projects, SciPy provides tools for preprocessing and analysis.

What It Does: Provides scientific computing functions and optimization.

Key Strength: Comprehensive scientific functions, optimization algorithms, and linear algebra.

Common Use: Statistical tests, optimization problems, signal processing.

When to Use: When you need specialized mathematical functions beyond NumPy. Use SciPy for statistical hypothesis testing, optimization algorithms, or signal processing tasks in your machine learning pipeline.

Now that you understand what’s available, how do you actually decide? With so many Python ML libraries to choose from, the decision can feel overwhelming. The good news is that the choice becomes much clearer when you think about your specific problem systematically.

Let’s walk through a practical framework that will help you narrow down from dozens of options to the exact tools you need.

Get Expert Guidance on Selecting the Right Python Libraries

Work with Space-O AI to choose the best Python tools for your machine learning project. Our team helps you evaluate options, plan the right tech stack, and build high-performance ML solutions.

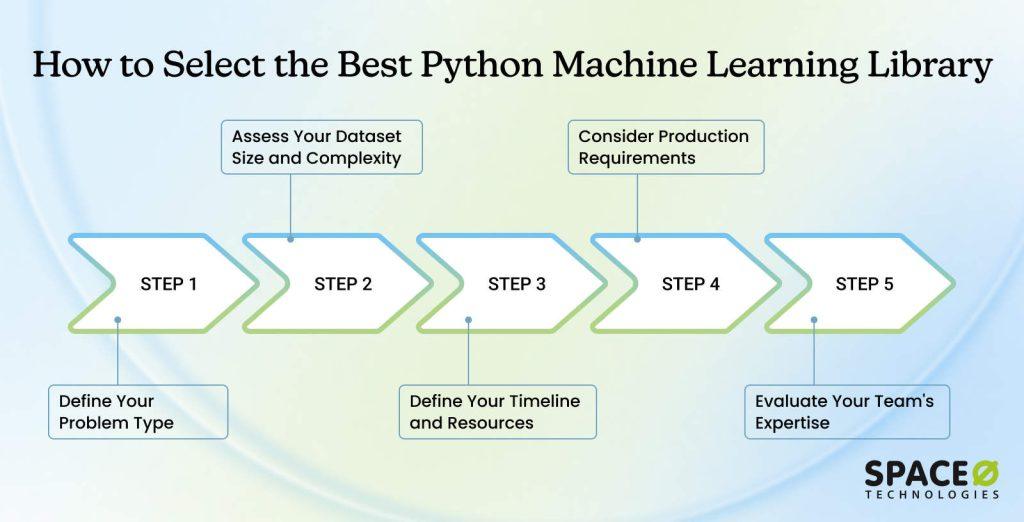

How to Choose the Right Python Machine Learning Library

Knowing what each library does is one thing. Choosing the right one for your specific project is another. Every situation is unique. Your timeline, budget, team expertise, and data requirements are different. Use this five-step framework to find which Python ML libraries actually fit your project’s ML tech stack.

Step 1: Define your problem type

Understanding what you’re trying to solve is the foundation of everything that follows. Different top Python libraries excel at different types of problems, and choosing the right tool for your specific challenge determines everything downstream.

Before you write a single line of code, take time to articulate what you’re trying to accomplish clearly. Are you predicting a number? Classifying items? Finding patterns in data? The answer shapes your entire library strategy.

Action items

- Write down your specific prediction goal (e.g., “predict house prices” or “classify emails as spam”)

- Identify your data type: structured tables, images, text, time series, or a combination

- Determine if you need interpretability (understanding why the model made a decision) or just accuracy

- Check if you have domain expertise or need off-the-shelf solutions

- List any constraints: speed requirements, hardware limitations, or regulatory compliance needs

Step 2: Assess your dataset size and complexity

The volume and structure of your data directly impact which machine learning libraries Python tools you should use. A dataset with a few thousand rows behaves very differently from one with billions of records.

Similarly, simple structured data requires different approaches than complex, unstructured data like images or text. Understanding your data landscape helps you eliminate libraries that are either overkill or insufficient for your needs.

Action items

- Count your total number of data samples and estimate memory requirements

- Determine if your data fits in RAM or requires distributed processing

- Assess data quality: how clean is it, how many missing values exist

- Identify the dimensionality: how many features or variables does each sample have

- Plan for future growth: will your dataset grow significantly, and if so, how quickly

Step 3: Define your timeline and resources

Time constraints and available resources are practical realities that influence your library choice. Some Python libraries for machine learning let you build working models in days, while others require weeks of development.

Your team’s expertise, your project deadline, and your infrastructure capabilities all matter. Be realistic about what you can accomplish with your current setup.

Action items

- Set a realistic deadline for your first working model

- Assess your team’s experience with different Python machine learning library options

- Evaluate available computing resources: CPU vs GPU, local vs cloud

- Consider training time: can you wait hours for model training, or do you need faster results

- Factor in maintenance burden: some libraries require more ongoing attention than others

Step 4: Consider production requirements

How your model needs to perform in the real world shapes your library choice significantly. A prototype that works on your laptop might fail completely when deployed to production.

Production systems have strict requirements around latency, scalability, interpretability, and reliability. Understanding these requirements upfront prevents costly refactoring later.

Action items

- Define latency requirements: how quickly must predictions be delivered

- Identify deployment environment: web server, mobile app, edge device, cloud platform

- Determine explainability needs: must you explain why the model made each decision

- Plan for updates: how often will you retrain your model with new data

- Check regulatory requirements: Does your industry require specific security or compliance measures

Step 5: Evaluate your team’s expertise and learning capacity

Your team’s current skill level and willingness to learn new tools significantly impact your success. Starting with a library that matches your team’s expertise accelerates development and reduces errors.

As your team grows and learns, you can adopt more specialized tools. There’s no shame in starting simple and upgrading later.

Action items

- Assess current experience: who on your team has prior machine learning experience

- Identify skill gaps: where will you need training or hiring ML developers

- Determine learning capacity: how much time can team members dedicate to learning new tools

- Consider knowledge retention: will knowledge stay with your team or leave when people do

- Plan for knowledge sharing: how will you onboard new team members to your chosen stack

Accelerate Your ML Development with Trusted Python Experts

Partner with Space O AI to pick the right libraries and build scalable machine learning models. We have delivered more than 500 AI projects across diverse industries.

Build Smarter Machine Learning Solutions with Space O AI

Choosing the right Python libraries is one of the most important steps in any machine learning project. The right tools can improve model accuracy, speed up development, simplify data workflows, and make your ML pipeline more efficient.

With so many libraries available today, understanding what each one offers and when to use it can make a major difference in the success of your project. This is where Space-O AI can support you. With more than 15 years of experience in machine learning engineering and over 500 AI projects delivered across multiple industries, our team understands the strengths and limitations of every major Python library.

Whether you need help selecting the right tools, designing a custom ML solution, or building an end-to-end machine learning product, we bring the technical expertise and real-world experience required to move your project forward with confidence.

If you are planning a machine learning application and want expert guidance on choosing the right Python libraries or developing a complete ML solution, our team is ready to help. Contact us today for a consultation and get a clear roadmap for your next machine learning project.

Frequently Asked Questions on Python Libraries for Machine Learning

Which Python library should I learn first?

Start with Pandas and NumPy. These form the foundation of data handling in machine learning with Python. Pandas teaches you how to load, explore, and prepare data, skills you’ll use in every project.

NumPy powers the mathematical operations underneath. Once comfortable with both (takes 2-3 weeks), move to Scikit-learn to learn actual machine learning algorithms. Only after mastering these should you explore deep learning libraries like TensorFlow or PyTorch.

Can I use multiple Python machine learning libraries in one project?

Absolutely. Using multiple Python AI library tools together is standard practice in real-world projects. A typical workflow uses Pandas for data preparation, NumPy for numerical operations, Scikit-learn for feature engineering, and XGBoost or TensorFlow for the actual model.

These machine learning libraries are designed to work together seamlessly. Think of it like a pipeline: each tool handles its specific job, then passes results to the next.

Which library handles the largest datasets best?

For classical machine learning, LightGBM is most efficient on datasets with millions of rows. It’s faster and uses less memory than XGBoost. For deep learning, TensorFlow and PyTorch can scale across multiple GPUs or machines using distributed training.

For truly massive data (billions of rows), consider Apache Spark with PySpark for preprocessing, then train with Python libraries for machine learning.

Are all Python machine learning libraries free?

Yes. NumPy, Pandas, Scikit-learn, TensorFlow, PyTorch, XGBoost, LightGBM, Keras, OpenCV, and Hugging Face Transformers are all completely free and open-source. You can build production systems without paying anything for software licenses.

This is one of Python’s major advantages. The best Python libraries for machine learning accessibility make it ideal for startups and enterprises alike.

Should I use a local library or a cloud-based AI service?

Local Python libraries for machine learning, like TensorFlow, give you control and work offline but require managing infrastructure. Cloud services (AWS SageMaker, Google Vertex AI) handle infrastructure but cost more.

For learning and prototyping, local tools are better. For production at scale, cloud services often make sense. Many successful teams use both strategically, based on their needs.# Python Libraries for Machine Learning: A Practical Guide for Developers (2025)

What’s the difference between Scikit-learn, XGBoost, and LightGBM?

All three work on structured, tabular data. Scikit-learn is easiest to learn and fastest to implement, but sometimes lacks accuracy. XGBoost delivers higher accuracy through advanced algorithms.

LightGBM is the fastest to train and most memory-efficient, ideal for huge datasets. Common approach: start with Scikit-learn, upgrade to XGBoost for accuracy, use LightGBM when speed matters most.

Need Help Choosing Python Libraries?

What to read next