As machine learning adoption grows across industries, teams are realizing that building a model is only the first step. The real challenge lies in taking that model from a research environment to a stable, scalable, and continuously improving production system. This is where an MLOps pipeline becomes essential.

The demand for structured and automated ML workflows is rapidly increasing. According to Grand View Research, the global MLOps market size was valued at USD 2.19 billion in 2024 and is projected to reach USD 16.6 billion by 2030, growing at a CAGR of 40.5%. This growth reflects how critical MLOps pipelines have become for companies that want to operationalize machine learning at scale.

An MLOps pipeline brings structure and automation to the entire machine learning lifecycle. It connects data ingestion, training, validation, deployment, and monitoring into a unified workflow that helps teams move faster while maintaining reliability. With an effective pipeline in place, organizations can reduce manual effort, improve reproducibility, and ensure that models remain accurate even as data evolves.

This blog contains our insights as an experienced machine learning development company to help you understand the MLOps pipeline. Understand what an MLOps pipeline is, how it works, the tools involved, and how you can build one that fits your business needs.

An MLOps pipeline is a structured and automated workflow that manages every stage of the machine learning lifecycle. It brings together data preparation, model development, testing, deployment, and ongoing monitoring into a single streamlined process. The goal is to ensure that machine learning models move from experimentation to production in a reliable, repeatable, and scalable way.

In traditional ML workflows, data scientists often build models in isolated environments, and deploying them into real applications becomes slow and error-prone. An MLOps pipeline solves this challenge by combining the principles of DevOps with machine learning practices. It creates an end-to-end system where datasets, experiments, model versions, and deployment environments are all managed through automation and standardization.

A typical MLOps pipeline includes steps such as data ingestion, feature engineering, model training, validation, version control, deployment to production, and continuous monitoring of model performance. Each stage is connected so that changes in data or performance can automatically trigger retraining or updates, which keep models accurate over time.

DevOps and MLOps sound similar because MLOps borrowed many principles from DevOps. However, they solve fundamentally different problems. Understanding these differences helps explain why MLOps requires its own specialized approach.

| Aspect | DevOps (Software) | MLOps (Machine Learning) |

| What Goes In | Source code | Data + Code + Models |

| What Comes Out | Deployed software application | ML system making predictions |

| Consistency | Same code = Same behavior every time | Same code ≠ Same results if data changes |

| What Changes | Code changes only | Code changes, data changes, model updates |

| Testing | Does the code compile? Does it have bugs? | Does the model perform? Has data quality changed? Is accuracy acceptable? |

| Key Challenge | Managing code versions | Managing code, data, and model versions together |

The fundamental insight is this: In DevOps, you control the system entirely through code. Write better code, and the system works better. In MLOps, your system’s accuracy depends just as much on data quality as it does on code quality.

MLOps addresses five critical business challenges that plague organizations without proper ML infrastructure.

Once built, MLOps pipelines run hands-off without manual intervention. New data automatically triggers ingestion, model retraining, performance testing, and deployment. Teams don’t manually trigger steps. Continuous monitoring detects problems within hours instead of weeks.

Every model stores complete metadata: training data, code version, parameters, and random seed. Run identical code with the same data and get identical results. This reproducibility is essential for regulatory audits and debugging production issues.

Version Control provides complete traceability. Every model version, every dataset, and every code change gets tracked in a centralized registry. You always know exactly which model is running in production.

If a problem occurs, you can rollback to a previous version in seconds rather than spending hours trying to figure out what went wrong. This creates an audit trail that satisfies compliance requirements.

Scalability means you don’t need to rebuild infrastructure for each new model. One pipeline manages one model or one thousand models equally well. Adding new models doesn’t require new infrastructure or new team members. You simply configure the pipeline to include the new model, and the system handles everything automatically.

Speed is the competitive advantage. Deploy models in hours instead of weeks. Your business responds to market changes faster than competitors. Your recommendation models improve weekly instead of quarterly. Your fraud detection adapts to new fraud patterns faster. These small weekly improvements compound over months and years into an exponential competitive advantage.

MLOps has become essential for any organization serious about machine learning because it creates value across every part of your business. Different teams benefit in specific, measurable ways.

MLOps means spending less time on repetitive manual work and more time on innovation. Your models actually reach customers instead of sitting in notebooks gathering dust. Your research and experimentation directly create measurable business value. Instead of waiting weeks for deployment, you see your work impact the business within days.

MLOps provides reproducible, testable ML systems instead of mysterious black boxes that nobody understands. You work with models like any other software component. Version control, testing frameworks, and deployment pipelines make models manageable and maintainable. You can integrate ML into applications with confidence rather than uncertainty.

MLOps means automated processes instead of manual deployments that fail at 3 AM. Clear monitoring tells you immediately when something breaks. You have playbooks for responding to problems rather than guessing at solutions. Incident response becomes systematic and reliable instead of chaotic and reactive.

MLOps delivers faster time-to-market, meaning new ML features reach customers weeks faster. Higher quality predictions improve customer experience and business outcomes. Lower operational costs come from automation replacing manual work. Competitive advantage emerges through faster model iteration that competitors can’t match.

MLOps results in better recommendations that are more relevant and timely. Faster fraud detection catches threats before they cause damage. More accurate predictions in healthcare, finance, and other domains directly improve outcomes. Customers experience increasingly personalized and trustworthy AI-powered services.

MLOps transforms machine learning from an experiment that “might work” into a reliable, scalable business capability. It’s no longer optional for serious organizations. It’s an operational necessity.

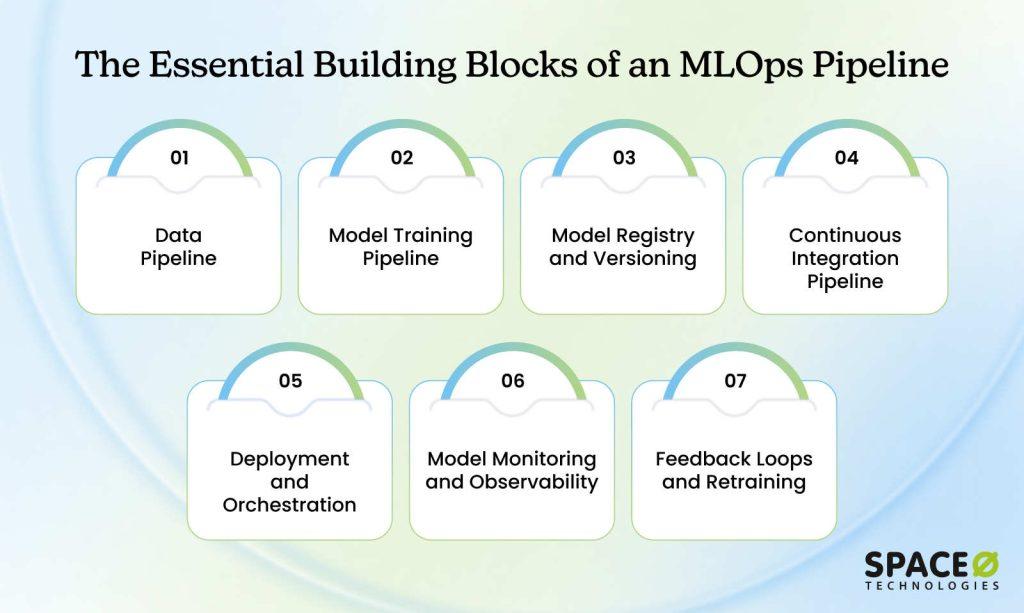

Now that you understand what MLOps is and why it matters, let’s explore how it actually works. Every MLOps pipeline is built on seven core components that work together to automate the entire machine learning lifecycle. Understanding these components will show you exactly how organizations transform from manual ML processes to automated, production-ready systems.

Build a Scalable MLOps Pipeline With Space-O AI

Create reliable, automated ML workflows with our machine learning development team. Get expert guidance on model training, deployment, and end-to-end pipeline setup.

An MLOps pipeline isn’t a single tool or service. It’s an orchestrated system of seven specialized components, each handling a critical part of the machine learning lifecycle. Together, these components automate everything from data preparation to model monitoring.

Understanding each component helps you see why MLOps transforms how organizations operate machine learning at scale.

A data pipeline manages your complete data lifecycle. It ingests data from multiple sources, validates quality, cleans inconsistencies, engineers features, and stores everything in a centralized location. All models access the same high-quality data from this single source.

This component automates model building. When new data arrives, it automatically trains different models, tests various algorithms, tunes parameters, and evaluates performance against accuracy thresholds. No manual intervention required.

A central repository that stores every trained model with complete metadata. You always know which model is in production, can rollback to previous versions instantly, and maintain a complete audit trail for compliance.

This component automatically tests models before deployment. It validates data quality, tests model performance, checks integration with existing systems, and ensures everything meets production standards. Only models that pass all tests proceed to deployment.

Handles the actual deployment of models to production. It packages models in containers, manages deployment to infrastructure, handles gradual rollouts to detect problems early, and can automatically roll back if issues occur.

Continuously watches model performance after deployment. It tracks accuracy metrics, detects when performance drops, identifies data drift, monitors system resources, and alerts the team immediately when problems occur.

Closes the feedback loop by collecting real-world outcomes, comparing them to predictions, identifying when retraining is needed, and automatically triggering new model training. This ensures models continuously improve.

These seven components don’t exist in isolation. They form a complete system where data flows through each stage, models get continuously updated, and problems get detected and fixed automatically. Each component feeds into the next, creating a self-improving machine learning operation.

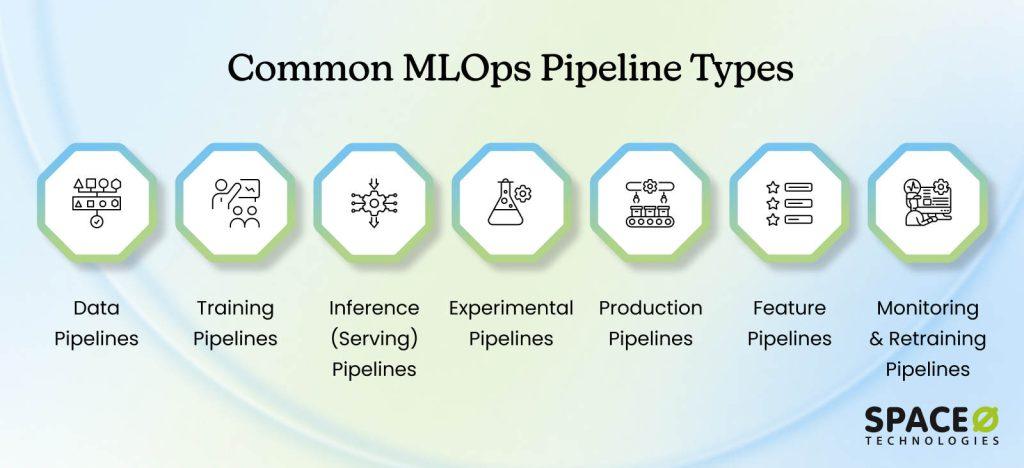

Now that you understand the MLOps components, it’s important to recognize that not all MLOps pipelines are identical. Different use cases require different types of pipelines optimized for specific purposes.

While these seven components form the foundation of every MLOps pipeline, organizations don’t build one-size-fits-all systems. Different use cases require different types of machine learning pipelines optimized for specific purposes. Understanding each type helps you design the right MLOps framework for your needs.

Purpose: Manage the complete data lifecycle from collection to features.

Data pipelines are the foundation of any machine learning pipeline. They extract data from multiple sources, validate quality, clean inconsistencies, and engineer features that models can learn from.

In a typical MLOps pipeline example, a data pipeline might collect customer behavior data daily, validate completeness, remove duplicates, and create features like purchase frequency and recency.

When to use: Always. Every MLOps framework needs a robust data pipeline.

Key benefit: Ensures all models train on consistent, high-quality data

Purpose: Automatically build and update ML models.

Training pipelines automate the model-building process. When new data arrives, they automatically train multiple models, compare performance, and register the best one. This is central to machine learning operations for organizations that need continuous model improvement.

When to use: When you want models to stay fresh with new data automatically.

Key benefit: Models improve continuously without manual intervention.

Purpose: Generate predictions from deployed models.

Inference pipelines make predictions available to applications. They come in two forms: online serving for real-time predictions through APIs, and batch serving for processing many records overnight. This is where MLOps solutions deliver actual business value to customers.

When to use: Always in production. This is how customers actually use your models.

Key benefit: Predictions reach applications and customers reliably.

Purpose: Support rapid iteration during model development.

Experimental pipelines are loose and flexible. Data scientists use them to try many approaches quickly without strict production controls. They’re essential during the exploration phase before moving to production.

When to use: During initial model development and research.

Key benefit: Enables fast learning and innovation.

Purpose: Reliable, monitored model serving in production.

Production pipelines are strict and controlled. They include comprehensive testing, monitoring, disaster recovery, and compliance controls. These represent industry best practices for organizations with real business risk.

When to use: Once models are validated and are creating real business value.

Key benefit: Reliable, compliant, monitored systems.

Purpose: Build and maintain features consistently across all models.

Feature pipelines compute features once and make them available to all models. Without feature pipelines, teams duplicate work and create inconsistencies. This is a core component of modern MLOps platform architecture.

When to use: Once you have multiple models sharing data.

Key benefit: Consistency and reduced duplication across models.

Purpose: Detect problems and trigger automatic fixes.

Monitoring pipelines watch model performance continuously. When accuracy drops or data patterns change, they automatically trigger retraining. This is essential for maintaining system quality over time.

When to use: Always in production. Continuous monitoring is non-negotiable.

Key benefit: Problems detected within hours, not weeks.

You’ve seen the different types of pipelines available. Now let’s see them in action. The following section walks through the complete workflow of an MLOps pipeline, showing you exactly what happens at each stage from data arrival to model deployment.

Accelerate Your ML Deployment With Our MLOps Expertise

Work with specialists who design production-ready ML pipelines that improve accuracy, speed, and scalability. Book a consultation with our AI experts.

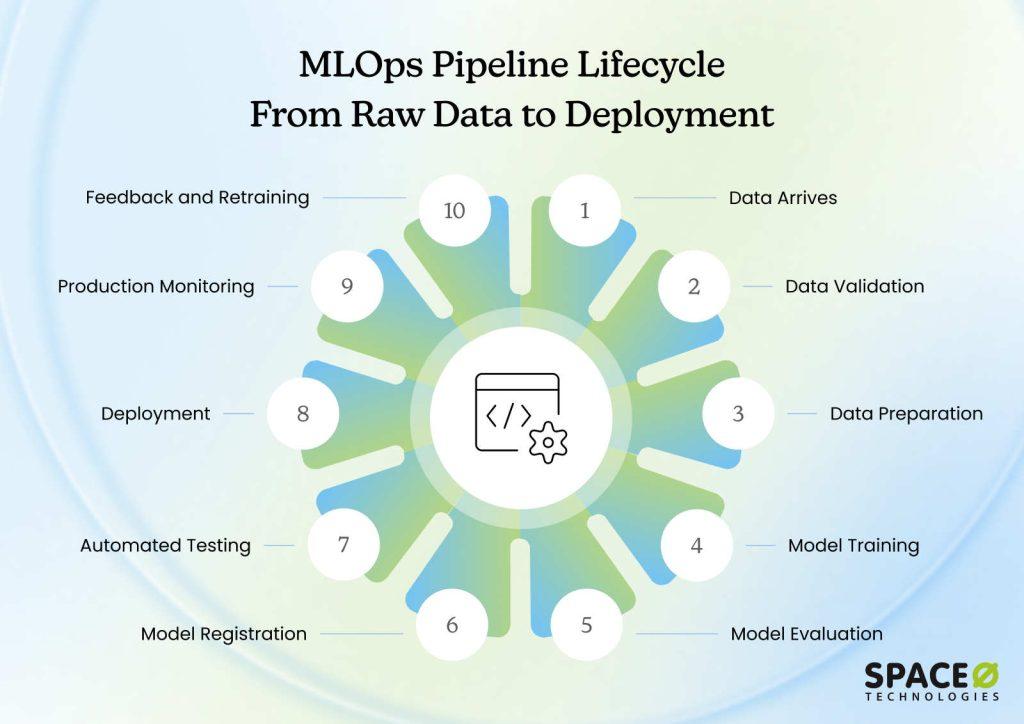

An MLOps pipeline follows a repeating cycle that automates machine learning from start to finish. Understanding each step helps you see how models go from raw data to production predictions, and how they continuously improve over time without manual intervention.

New data continuously flows into your business systems from various sources. Customer behavior, transactions, sensor readings, API feeds, or any other data your models require. The pipeline detects this new data automatically through scheduled triggers or event-based notifications and begins processing it immediately without waiting for manual action.

Before using the data, the pipeline validates its correctness and quality. It checks that all required fields are present, values fall within expected ranges, and there are no obvious quality issues like impossible dates or negative counts. Bad data gets flagged and isolated before it can corrupt your models or produce inaccurate predictions.

The pipeline cleans the data by handling missing values through imputation or removal, eliminating duplicate records, and standardizing formats across different sources. It then engineers features that models can effectively learn from. Raw timestamps become day-of-week indicators. Purchase history becomes customer value scores. Categorical variables get encoded into numerical representations.

The pipeline automatically trains models using the prepared data without human intervention. It may test multiple algorithms simultaneously, compare their performance metrics, tune hyperparameters, and select the best-performing model. This entire process happens on a schedule or is triggered by new data arrival, compressing weeks of manual work into hours.

The trained model gets tested rigorously on data it has never seen before to assess real-world performance. The pipeline checks if accuracy meets minimum standards, precision and recall are acceptable, and performance is consistent across different data segments. If the model doesn’t perform well enough, it’s rejected, and the training process restarts with different approaches or features.

If the model passes evaluation thresholds, it automatically gets registered in a central repository with complete metadata attached. Version number, training date, all accuracy metrics, exact data version used, code version, and hyperparameters are recorded. This creates a permanent audit trail enabling compliance, debugging, and understanding model lineage.

Before any deployment to production, the model undergoes comprehensive automated testing. Does it integrate seamlessly with existing systems and databases? Can it handle edge cases and unusual inputs gracefully? Is performance consistent under load? Only models that pass every test proceed safely to production environments.

The approved model gets packaged in a Docker container and deployed to production infrastructure. It doesn’t immediately serve all customer traffic for safety reasons. Instead, it starts handling only 10% of traffic (canary deployment) while monitoring systems watch closely for any performance degradation or unexpected behavior compared to the current production model.

Once deployed, the pipeline continuously monitors model performance in real-time production environments. It tracks accuracy metrics, response times, error rates, and whether input data patterns are changing significantly. If performance drops below acceptable thresholds or data drift is detected, automated alerts trigger immediately so teams can respond quickly.

The pipeline collects actual outcomes after predictions are made and stored by applications. It compares what the model predicted to what actually happened in the real world. When performance degrades significantly or new patterns emerge in data, it automatically triggers retraining with fresh data, starting the entire cycle again without manual intervention.

These ten steps repeat automatically on a continuous basis. Data arrives, models train, performance improves, deployments happen safely, and monitoring continues vigilantly. This endless cycle is what separates modern MLOps from traditional manual ML processes.

Instead of deploying once quarterly and hoping it works, MLOps enables continuous daily improvements that compound exponentially over time.

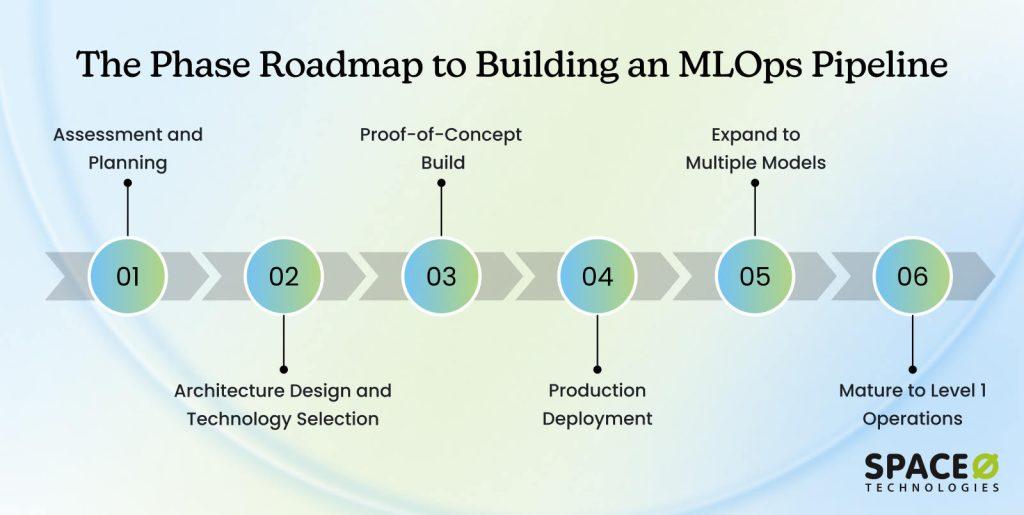

Building an MLOps pipeline isn’t something you do overnight. Organizations need a structured approach to implement these components successfully. Here’s a practical implementation roadmap that shows you exactly how to get started.

Building an MLOps pipeline doesn’t happen overnight. It’s a progression where you start simple, learn what works, then scale. Trying to do everything at once overwhelms teams and wastes resources. This roadmap breaks down the journey into six manageable phases spanning 4-6 months.

The first step is understanding exactly where your organization stands today and defining where you want to go. This clarity prevents wasted effort and ensures everyone moves in the same direction.

Document your current ML workflow from end-to-end, identify specific bottlenecks frustrating your team, define measurable success criteria, and secure budget and stakeholder alignment. This foundation shapes everything that follows.

Now that you understand your current state and goals, it’s time to design the architecture and choose tools. Your choices here determine how scalable and maintainable your MLOps system will be.

This phase involves deciding your maturity target, selecting your cloud platform, choosing core infrastructure tools, and creating simple architecture diagrams. Don’t get caught in tool analysis paralysis. Pick reasonable options that solve your current problems. You can always upgrade or change tools later as your needs evolve.

This is where you build your first working MLOps pipeline end-to-end. Keep the scope intentionally small with one model and one data source. You’re not building the final production system. You’re learning what works, what doesn’t, and what you missed. Focus on simplicity over perfection. By the end, you’ll have a complete working pipeline from data ingestion to deployment.

Now you move your proof-of-concept from staging to production, where it serves real customers. This phase requires adding layers of automation, resilience, and monitoring that weren’t necessary in the PoC.

Automate the entire deployment process, implement error handling and retry logic, add advanced drift detection, and use gradual rollout to minimize customer impact if problems occur. Production is fundamentally different from development. Users depend on your models. Reliability matters more than speed.

Your first model is now running in production reliably. The next phase scales this by deploying additional models using the infrastructure you built. Generalize your pipeline components into reusable templates so the second model deploys faster than the first, the third faster than the second, and so on.

Enable data scientists to deploy models with minimal engineering support by creating self-service tools and clear documentation. Over three months, you’ll go from one model to five or ten models running in production, all sharing the same reliable MLOps infrastructure.

By this point, you have multiple models in production and solid infrastructure. This final phase transitions from manual decision-making to truly automated operations where the system improves itself continuously. Implement automated retraining where new data automatically triggers model updates without human intervention.

Add A/B testing so every new model is tested against the current production model before deployment. Establish feedback loops connecting real-world prediction outcomes back to the training pipeline. Models learn from their mistakes automatically. The entire organization now operates at MLOps maturity Level 1.

This six-phase roadmap transforms manual ML workflows into automated systems. Most teams need to hire ML developers from an experienced ML development outsourcing agency to execute this process successfully. The key is starting small, learning each phase, and expanding deliberately. Organizations completing this roadmap gain competitive advantages that compound over time.

Understanding the roadmap is one thing. Executing it successfully is another. Many teams stumble not because the plan is wrong, but because they repeat common mistakes that could have been avoided. Here are the pitfalls that derail implementations, and how to avoid them.

Hire Our Expert Machine Learning Engineers to Build Your MLOps Pipeline

From data ingestion to continuous monitoring, Space-O AI helps you build a complete MLOps pipeline tailored to your product and infrastructure.

Building an MLOps pipeline follows a clear roadmap. But roadmaps don’t guarantee success. The real challenge is execution. Even teams with excellent plans stumble because they repeat mistakes that others have already learned from. These seven pitfalls appear in almost every implementation. Understanding them up front helps you avoid months of delays and wasted investment.

Teams try to build all seven pipeline types immediately instead of starting with essentials. This overwhelms resources, stretches budgets thin, and delays time-to-value. Organizations that try to do everything end up with nothing finished. The result is delayed deployments, frustrated teams, and wasted investment.

Your first pipeline doesn’t need to be perfect. It needs to work and teach you what you missed. Teams get stuck optimizing and polishing the PoC when they should be learning from mistakes. This perfectionism delays progress by weeks. By the time the PoC launches, requirements have changed, and learnings are outdated.

Teams consistently underestimate how much effort goes into data pipelines. Data ingestion, validation, cleaning, and feature engineering consume 60–70% of MLOps effort. Organizations that underestimate data work run out of budget mid-project or deliver systems built on poor data quality.

Operations teams maintain systems in production. If you exclude them from Phase 1 planning, they’ll resist your system when it goes live. Operations will be critical during Phase 4 and beyond, so building resistance early creates friction and delays. Their absence in planning means that monitoring and reliability requirements won’t be addressed.

Teams debate tools endlessly. AWS versus GCP. Airflow versus Prefect. MLflow versus Weights & Biases. This comparison paralysis delays actual progress by weeks or months. While teams evaluate options, competitors ship solutions. The best tool isn’t the one with the most features. It’s the one you actually use.

An MLOps pipeline automates your entire machine learning lifecycle from data ingestion through training, deployment, and continuous monitoring. Organizations implementing these systems deploy models in days instead of weeks, improve accuracy through automated retraining, and maintain reliability at scale. The competitive advantage comes from speed and operational excellence.

At Space-O AI, we specialize in building production-ready MLOps solutions tailored to your business needs. With 15+ years of AI expertise and 500+ successful machine learning projects delivered, our experienced team understands the unique challenges you face. We deliver fully functional MLOps pipelines that integrate seamlessly with your existing infrastructure and scale with your business growth.

We guarantee 99.9% uptime with enterprise-grade security, ensuring your systems remain reliable and compliant. Check our portfolio to see how we’ve helped global organizations transform their AI operations through innovative solutions and proven expertise.

AI Receptionist Development (Welco)

Space-O developed Welco, an AI receptionist using NLP and voice technology for a USA entrepreneur. The solution automates call management, appointment scheduling, and customer support. Welco achieved a 67% reduction in missed inquiries, delivering 24/7 multilingual support and reducing operational costs significantly.

AI Headshot Generator App

Our experts built an iOS app leveraging Flux LoRA and cloud GPU infrastructure for a Canadian entrepreneur. Users upload photos to create personalized AI models within 2-3 minutes, then generate professional headshots in 30-40 seconds. The app supports 15+ languages and multiple style options, including corporate, casual, and fantasy themes.

AI Product Comparison Tool

We developed an AI-powered comparison tool for an e-commerce consultancy using GPT-4 and vector embeddings. Users chat with an AI assistant to find product recommendations based on specifications. Features include chat history, multilingual support, an admin dashboard for analytics, and product management through CSV uploads.

Let’s discuss how MLOps can transform your machine learning operations and accelerate your competitive advantage. Get in touch with us today to start building your production-ready MLOps solution with our experts. Book your free MLOps assessment now.

Both approaches work depending on your situation. Build in-house if you have dedicated resources and want complete control. Partner with external experts for faster implementation, proven frameworks, and access to specialized knowledge.

Many organizations use a hybrid approach: hire experienced partners to design and implement the foundation, then build internal expertise for ongoing maintenance.

Data quality is critical because models are only as good as their training data. Implement comprehensive data validation checks for schema correctness, value ranges, completeness, and statistical anomalies.

Clean inconsistencies in formatting, handle missing values, and remove duplicates automatically. Allocate 60–70% of MLOps effort toward data pipeline work, including ingestion, validation, cleaning, and feature engineering.

Yes. Successful integration starts with understanding your current system architecture. Legacy systems may require middleware solutions to bridge to new infrastructure, while modern systems with APIs integrate more easily.

The best approach follows MLOps best practices: start by deploying new models through MLOps pipelines while keeping existing models running in parallel. Gradually migrate existing models as confidence builds. Document all integrations, maintain version control for models, implement automated testing before production, and monitor both old and new systems simultaneously during transitions.

Level 0 (manual) means everything is manual with quarterly deployments and no monitoring. Level 1 (automated training) adds automatic model retraining when new data arrives, daily or weekly deployments, and basic monitoring.

Level 2 (full automation) includes multiple automated pipelines, multiple daily deployments, advanced drift detection, automatic rollback, and a full A/B testing framework for every deployment.

Most organizations achieve positive ROI within 12–18 months. Budget estimate ranges from $200K–$500K depending on cloud platform, team location, and scope.

Returns come through faster model deployment, reducing labor hours, improved model accuracy through continuous retraining, operational cost reduction from automation, and a competitive advantage from faster iteration cycles.

Looking for MLOps Experts?

What to read next